I took too long a vacation. Actually it was only 3 weeks, but I more or less stopped posting here during that time. And the procrastination came back strong. So here we go with an attempt to get back to writing weekly.

Today, while troubleshooting a service that wasn't working as it should (an application scan with OWASP ZAP), I discovered that my Docker containers had no network access. What changed? My work machine runs Ubuntu 18.04. The bionic-updates repository brought in a new Docker version that restarted the daemon, but... networking stopped working. And I only noticed it today.

root@dell-latitude-7480 /u/local# apt show docker.io
Package: docker.io
Version: 20.10.7-0ubuntu1~18.04.1
Built-Using: glibc (= 2.27-3ubuntu1.2), golang-1.13 (= 1.13.8-1ubuntu1~18.04.3)
Priority: optional
Section: universe/admin
Origin: Ubuntu
Maintainer: Ubuntu Developers
Original-Maintainer: Paul Tagliamonte
Bugs: https://bugs.launchpad.net/ubuntu/+filebug
Installed-Size: 193 MB
Depends: adduser, containerd (>= 1.2.6-0ubuntu1~), iptables, debconf (>= 0.5) | debconf-2.0, libc6 (>= 2.8), libdevmapper1.02.1 (>= 2:1.02.97), libsystemd0 (>= 209~)
Recommends: ca-certificates, git, pigz, ubuntu-fan, xz-utils, apparmor
Suggests: aufs-tools, btrfs-progs, cgroupfs-mount | cgroup-lite, debootstrap, docker-doc, rinse, zfs-fuse | zfsutils
Breaks: docker (<< 1.5~)
Replaces: docker (<< 1.5~)
Homepage: https://www.docker.com/community-edition
Download-Size: 36.9 MB
APT-Manual-Installed: yes
APT-Sources: mirror://mirrors.ubuntu.com/mirrors.txt bionic-updates/universe amd64 Packages
Description: Linux container runtime
 Docker complements kernel namespacing with a high-level API which operates at the process level. It runs unix processes with strong guarantees of isolation and repeatability across servers.
 .
 Docker is a great building block for automating distributed systems: large-scale web deployments, database clusters, continuous deployment systems, private PaaS, service-oriented architectures, etc.
 .
 This package contains the daemon and client. Using docker.io on non-amd64 hosts is not supported at this time. Please be careful when using it on anything besides amd64.
 .
 Also, note that kernel version 3.8 or above is required for proper operation of the daemon process, and that any lower versions may have subtle and/or glaring issues.

N: There is 1 additional record. Please use the '-a' switch to see it

The first thing I tried was simply restarting Docker.

root@dell-latitude-7480 /u/local# systemctl restart --no-block docker; journalctl -u docker -f
[...]
Aug 12 10:29:25 dell-latitude-7480 dockerd[446605]: time="2021-08-12T10:29:25.203367946+02:00" level=info msg="Firewalld: docker zone already exists, returning"
Aug 12 10:29:25 dell-latitude-7480 dockerd[446605]: time="2021-08-12T10:29:25.549158535+02:00" level=warning msg="could not create bridge network for id
    88bd200b5bb27d3fd10d9e8bf86b1947b2190cf7be36cd7243eec55ac8089dc6 bridge name docker0 while booting up from persistent state: Failed to program NAT chain:
    ZONE_CONFLICT: 'docker0' already bound to a zone"
Aug 12 10:29:25 dell-latitude-7480 dockerd[446605]: time="2021-08-12T10:29:25.596805407+02:00" level=info msg="stopping event stream following graceful shutdown"
    error="" module=libcontainerd namespace=moby
Aug 12 10:29:25 dell-latitude-7480 dockerd[446605]: time="2021-08-12T10:29:25.596994440+02:00" level=info msg="stopping event stream following graceful shutdown"
    error="context canceled" module=libcontainerd namespace=plugins.moby
Aug 12 10:29:25 dell-latitude-7480 dockerd[446605]: failed to start daemon: Error initializing network controller: Error creating default "bridge" network:
    Failed to program NAT chain: ZONE_CONFLICT: 'docker0' already bound to a zone
Aug 12 10:29:25 dell-latitude-7480 systemd[1]: docker.service: Main process exited, code=exited, status=1/FAILURE
Aug 12 10:29:25 dell-latitude-7480 systemd[1]: docker.service: Failed with result 'exit-code'.
Aug 12 10:29:25 dell-latitude-7480 systemd[1]: Failed to start Docker Application Container Engine.
Aug 12 10:29:27 dell-latitude-7480 systemd[1]: docker.service: Service hold-off time over, scheduling restart.
Aug 12 10:29:27 dell-latitude-7480 systemd[1]: docker.service: Scheduled restart job, restart counter is at 3.
Aug 12 10:29:27 dell-latitude-7480 systemd[1]: Stopped Docker Application Container Engine.
Aug 12 10:29:27 dell-latitude-7480 systemd[1]: docker.service: Start request repeated too quickly.
Aug 12 10:29:27 dell-latitude-7480 systemd[1]: docker.service: Failed with result 'exit-code'.
Aug 12 10:29:27 dell-latitude-7480 systemd[1]: Failed to start Docker Application Container Engine.

The lines have been edited to make them easier to read, since systemd emits lines much longer than 120 columns. But the result was... failure.
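With a journal this noisy, it can help to pull out just the interface named in the conflict. A minimal sketch, assuming the log keeps the `ZONE_CONFLICT: '<iface>' already bound to a zone` wording seen in the output; `zone_conflicts` is a hypothetical helper name, not something shipped with Docker or firewalld:

```shell
# zone_conflicts: read journal lines on stdin and print, once each,
# every interface reported in a ZONE_CONFLICT error.
zone_conflicts() {
    sed -n "s/.*ZONE_CONFLICT: '\([^']*\)' already bound to a zone.*/\1/p" | sort -u
}

# Usage (assumes the docker unit is managed by systemd):
#   journalctl -u docker --no-pager | zone_conflicts
```

Lines without the error are dropped, so an empty result means no zone conflict was logged.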

Stopping firewalld and then restarting only Docker led to a state where the daemon started but, once a container was launched, it again had no network access.

root@dell-latitude-7480 /u/local# docker run -it --rm --init ubuntu:20.04 bash
root@f45dcbb1ecaa:/# ping 1.1.1.1
PING 1.1.1.1 (1.1.1.1) 56(84) bytes of data.
^C
--- 1.1.1.1 ping statistics ---
6 packets transmitted, 0 received, 100% packet loss, time 5153ms
root@f45dcbb1ecaa:/# exit

Looking only at the firewall rules, I could see that Docker really was loading the correct rule when firewalld was not running:

root@dell-latitude-7480 /u/local# systemctl stop firewalld.service

root@dell-latitude-7480 /u/local# iptables -L -n -t nat

Chain PREROUTING (policy ACCEPT)

target prot opt source destination

Chain INPUT (policy ACCEPT)

target prot opt source destination

Chain OUTPUT (policy ACCEPT)

target prot opt source destination

Chain POSTROUTING (policy ACCEPT)

target prot opt source destination

root@dell-latitude-7480 /u/local# systemctl restart docker

root@dell-latitude-7480 /u/local# systemctl status docker

● docker.service - Docker Application Container Engine

Loaded: loaded (/lib/systemd/system/docker.service; enabled; vendor preset: enabled)

Active: active (running) since Thu 2021-08-12 12:01:12 CEST; 4s ago

Docs: https://docs.docker.com

Main PID: 484649 (dockerd)

Tasks: 27

CGroup: /system.slice/docker.service

└─484649 /usr/bin/dockerd -H fd:// --containerd=/run/containerd/containerd.sock

Aug 12 12:01:12 dell-latitude-7480 dockerd[484649]: time="2021-08-12T12:01:12.061383466+02:00" level=warning msg="Your kernel does not support swap

memory limit"

Aug 12 12:01:12 dell-latitude-7480 dockerd[484649]: time="2021-08-12T12:01:12.061414030+02:00" level=warning msg="Your kernel does not support CPU

realtime scheduler"

Aug 12 12:01:12 dell-latitude-7480 dockerd[484649]: time="2021-08-12T12:01:12.061421558+02:00" level=warning msg="Your kernel does not support cgroup

blkio weight"

Aug 12 12:01:12 dell-latitude-7480 dockerd[484649]: time="2021-08-12T12:01:12.061427194+02:00" level=warning msg="Your kernel does not support cgroup

blkio weight_device"

Aug 12 12:01:12 dell-latitude-7480 dockerd[484649]: time="2021-08-12T12:01:12.061796106+02:00" level=info msg="Loading containers: start."

Aug 12 12:01:12 dell-latitude-7480 dockerd[484649]: time="2021-08-12T12:01:12.531851162+02:00" level=info msg="Loading containers: done."

Aug 12 12:01:12 dell-latitude-7480 dockerd[484649]: time="2021-08-12T12:01:12.549979768+02:00" level=info msg="Docker daemon"

commit="20.10.7-0ubuntu1~18.04.1" graphdriver(s)=overlay2 version=20.10.7

Aug 12 12:01:12 dell-latitude-7480 dockerd[484649]: time="2021-08-12T12:01:12.550057275+02:00" level=info msg="Daemon has completed initialization"

Aug 12 12:01:12 dell-latitude-7480 dockerd[484649]: time="2021-08-12T12:01:12.558188106+02:00" level=info msg="API listen on /var/run/docker.sock"

Aug 12 12:01:12 dell-latitude-7480 systemd[1]: Started Docker Application Container Engine.

root@dell-latitude-7480 /u/local# iptables -L -n -t nat

Chain PREROUTING (policy ACCEPT)

target prot opt source destination

DOCKER all -- 0.0.0.0/0 0.0.0.0/0 ADDRTYPE match dst-type LOCAL

Chain INPUT (policy ACCEPT)

target prot opt source destination

Chain OUTPUT (policy ACCEPT)

target prot opt source destination

DOCKER all -- 0.0.0.0/0 !127.0.0.0/8 ADDRTYPE match dst-type LOCAL

Chain POSTROUTING (policy ACCEPT)

target prot opt source destination

MASQUERADE all -- 172.16.0.0/24 0.0.0.0/0

Chain DOCKER (2 references)

target prot opt source destination

RETURN all -- 0.0.0.0/0 0.0.0.0/0

Clearly there was a MASQUERADE rule coming from the Docker network (172.16.0.0/24). And firewalld was wiping out that rule when it was activated (to keep things less cluttered with many rules, I only captured the output of the POSTROUTING chain).
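The same before/after comparison can be turned into a quick check. This is only a sketch: `has_masquerade` is a hypothetical helper, and it assumes the column layout of `iptables -L POSTROUTING -n -t nat` shown in this post (target, prot, opt, source, destination):

```shell
# has_masquerade SUBNET
# Read `iptables -L POSTROUTING -n -t nat` output on stdin and succeed
# only if a MASQUERADE rule exists whose source column equals SUBNET.
has_masquerade() {
    awk -v subnet="$1" '
        $1 == "MASQUERADE" && $4 == subnet { found = 1 }
        END { exit !found }
    '
}

# Usage:
#   iptables -L POSTROUTING -n -t nat | has_masquerade 172.16.0.0/24 \
#       && echo "NAT rule present" || echo "NAT rule missing"
```

Running it once with firewalld stopped and once with it started makes the disappearing rule easy to spot.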

root@dell-latitude-7480 /u/local# systemctl start firewalld.service
root@dell-latitude-7480 /u/local# iptables -L POSTROUTING -n -t nat
Chain POSTROUTING (policy ACCEPT)
target prot opt source destination
POSTROUTING_direct all -- 0.0.0.0/0 0.0.0.0/0
POSTROUTING_ZONES_SOURCE all -- 0.0.0.0/0 0.0.0.0/0
POSTROUTING_ZONES all -- 0.0.0.0/0 0.0.0.0/0

My first idea: force a MASQUERADE rule directly into the POSTROUTING chain.

root@dell-latitude-7480 /u/local# iptables -I POSTROUTING 1 -s 172.16.0.0/24 -j MASQUERADE -t nat
root@dell-latitude-7480 /u/local# iptables -L POSTROUTING --line-numbers -t nat
Chain POSTROUTING (policy ACCEPT)
num target prot opt source destination
1 MASQUERADE all -- 172.16.0.0/24 anywhere
2 POSTROUTING_direct all -- anywhere anywhere
3 POSTROUTING_ZONES_SOURCE all -- anywhere anywhere
4 POSTROUTING_ZONES all -- anywhere anywhere

And, of course, it didn't work.

After searching the Internet for Docker and firewalld, I found Docker's own site explaining how to do it at https://docs.docker.com/network/iptables/ with the following command:

# Please substitute the appropriate zone and docker interface
$ firewall-cmd --zone=trusted --remove-interface=docker0 --permanent
$ firewall-cmd --reload

Great. Now there was no way it could go wrong. And...

root@dell-latitude-7480 /u/local# firewall-cmd --get-zone-of-interface=docker0
public
root@dell-latitude-7480 /u/local# firewall-cmd --zone=public --remove-interface=docker0 --permanent
The interface is under control of NetworkManager and already bound to the default zone
The interface is under control of NetworkManager, setting zone to default.
success
root@dell-latitude-7480 /u/local# systemctl start docker
Job for docker.service failed because the control process exited with error code.
See "systemctl status docker.service" and "journalctl -xe" for details.

Damn... something wrong was not right. Well... had it worked the first time, I probably wouldn't have written this article.

So let's check again which zone the docker0 interface is in, remove the interface from that zone, and add it to the docker zone.

root@dell-latitude-7480 /u/local# firewall-cmd --get-zone-of-interface=docker0
public
root@dell-latitude-7480 /u/local# firewall-cmd --zone=public --remove-interface=docker0 --permanent
The interface is under control of NetworkManager and already bound to the default zone
The interface is under control of NetworkManager, setting zone to default.
success
root@dell-latitude-7480 /u/local# firewall-cmd --get-zone-of-interface=docker0
public
root@dell-latitude-7480 /u/local# firewall-cmd --reload
success
root@dell-latitude-7480 /u/local# firewall-cmd --get-zone-of-interface=docker0
public

What the heck... that was the problem I ran into. No matter how many times I removed, or tried to remove, the docker0 interface from the public zone, it always came back.

It took a few hours of this back and forth, searching the Internet for what to do and reading the firewalld documentation, until I finally got it right.

root@dell-latitude-7480 /u/local# firewall-cmd --zone=docker --add-interface=docker0 --permanent
The interface is under control of NetworkManager, setting zone to 'docker'.
success
root@dell-latitude-7480 /u/local# firewall-cmd --get-zone-of-interface=docker0
docker
root@dell-latitude-7480 /u/local# firewall-cmd --reload
success
root@dell-latitude-7480 /u/local# systemctl start docker

So the remove command wasn't needed. Just add the interface directly to the desired zone.
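The working sequence can be wrapped in a small function so it is easy to repeat after the next package update. This is a sketch under assumptions: `bind_zone` and the `RUN` variable are hypothetical names introduced here (set `RUN=echo` to preview the commands without touching the firewall), and it simply replays the `firewall-cmd` calls that worked on my machine:

```shell
# bind_zone ZONE IFACE
# Bind IFACE to ZONE in the permanent configuration, reload firewalld,
# then print the zone the interface ended up in.
# RUN is a hypothetical dry-run hook: RUN=echo prints instead of executing.
bind_zone() (
    zone="$1"
    iface="$2"
    ${RUN:-} firewall-cmd --zone="$zone" --add-interface="$iface" --permanent
    ${RUN:-} firewall-cmd --reload
    ${RUN:-} firewall-cmd --get-zone-of-interface="$iface"
)

# Usage:
#   RUN=echo bind_zone docker docker0    # preview only
#   bind_zone docker docker0 && systemctl start docker
```

The dry-run hook is there because a mistake in zone assignment can cut network access on a remote machine; previewing first costs nothing.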

root@dell-latitude-7480 /u/local# docker run -it --rm --init ubuntu:20.04 bash
root@e5d78d7f081b:/# ping -c 5 1.1.1.1
PING 1.1.1.1 (1.1.1.1) 56(84) bytes of data.
64 bytes from 1.1.1.1: icmp_seq=1 ttl=58 time=1.95 ms
64 bytes from 1.1.1.1: icmp_seq=2 ttl=58 time=2.02 ms
64 bytes from 1.1.1.1: icmp_seq=3 ttl=58 time=1.68 ms
64 bytes from 1.1.1.1: icmp_seq=4 ttl=58 time=1.62 ms
64 bytes from 1.1.1.1: icmp_seq=5 ttl=58 time=1.76 ms
--- 1.1.1.1 ping statistics ---
5 packets transmitted, 5 received, 0% packet loss, time 4003ms
rtt min/avg/max/mdev = 1.621/1.808/2.026/0.162 ms
root@e5d78d7f081b:/# exit

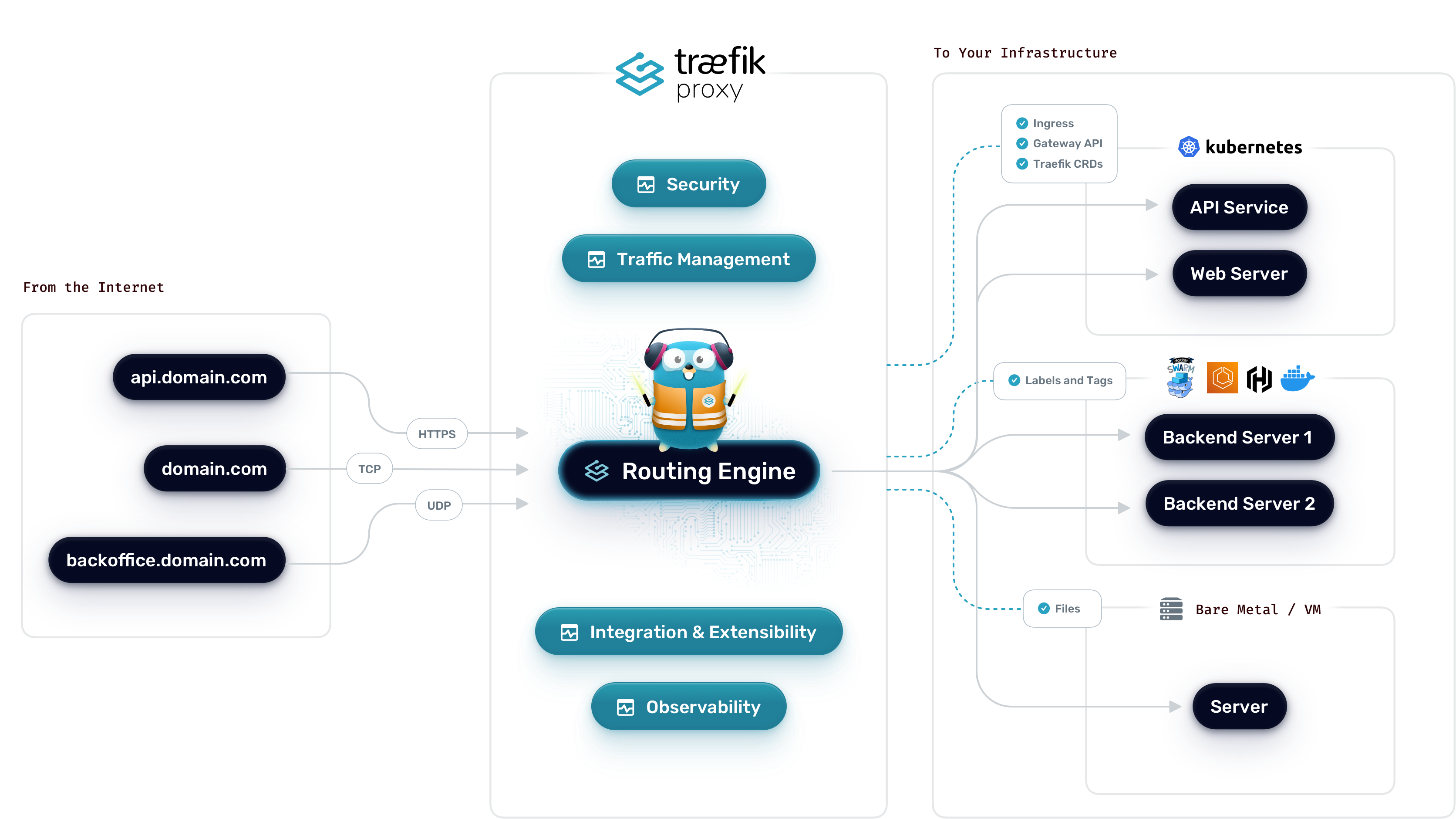

This seems to be a somewhat... dystopian topic? Maybe not that much. But all the references on the subject on the Internet are incomplete and are usually for older versions of traefik. Traefik is a connection router that runs as a program started by systemd (or another init system if it's a BSD), as a container with something like docker-compose, or directly on Kubernetes. And it doesn't only work as a reverse proxy: it can also act as an HTTP server for your connections. And it handles not only TCP but also UDP. And it's written in Go!

I needed to put an SSH server behind traefik. And it took some work. To test it and show it here as well, I used docker-compose for the service. That made configuration easier. As the SSH backend, I brought up a Gitea instance with SSH on port 2222. I also used Let's Encrypt certificates on the service's HTTPS ports.

As a bonus, I also added an allowlist so the service can only be reached from certain IPs.

Let's get to the configuration then. First, traefik's.

To bring it up, I configured it to listen on ports 80 (http), 443 (https), 8080 (traefik dashboard), and 2222 (ssh).

The contents of traefik/docker-compose.yml:

services:
  traefik:
    image: "traefik:v3.3"
    container_name: "traefik"
    restart: unless-stopped
    environment:
      - TZ=Europe/Stockholm
    ports:
      - "80:80"
      - "443:443"
      - "8080:8080"
      - "2222:2222"
    volumes:
      - "./letsencrypt:/letsencrypt"
      - "/var/run/docker.sock:/var/run/docker.sock:ro"
      - "./traefik.yml:/traefik.yml:ro"
    networks:
      - traefik

networks:
  traefik:
    name: traefik

Along with this container configuration, I also included the service configuration in traefik/traefik.yml:

api:
  dashboard: true
  insecure: true
  debug: true

entryPoints:
  web:
    address: ":80"
    http:
      redirections:
        entryPoint:
          to: websecure
          scheme: https
  websecure:
    address: ":443"
  ssh:
    address: ":2222"

serversTransport:
  insecureSkipVerify: true

providers:
  docker:
    endpoint: "unix:///var/run/docker.sock"
    exposedByDefault: false
    network: traefik

certificatesResolvers:
  letsencrypt:
    acme:
      # placeholder: the address is obfuscated in the original post
      email: "<your-email>"
      storage: /letsencrypt/acme.json
      httpChallenge:
        # used during the challenge
        entryPoint: web

log:
  level: WARN

Here you can see that I define the entrypoints again for 80, 443, and 2222. On 80 (http) I redirect to 443 (https). And the certificates come from Let's Encrypt.

Everything is ready on the traefik side. Now it's time to bring up the service and prepare a few things for testing.

# docker compose -p traefik -f traefik/docker-compose.yml up -d

[+] Running 1/1

✔ Container traefik Started 0.4s

Inside the traefik directory a letsencrypt directory will be created, and inside it the acme.json file with your certificates.

First let's bring up a test container to see whether everything works.

The traefik project itself provides a container called whoami for this.

The contents of whoami/docker-compose.yml:

services:
  whoami:
    container_name: simple-service
    image: traefik/whoami
    labels:
      - "traefik.enable=true"
      - "traefik.http.routers.whoami.rule=Host(`whoami.tests.loureiro.eng.br`)"
      - "traefik.http.routers.whoami.entrypoints=websecure"
      - "traefik.http.routers.whoami.tls=true"
      - "traefik.http.routers.whoami.tls.certresolver=letsencrypt"
      - "traefik.http.services.whoami.loadbalancer.server.port=80"
    networks:
      - traefik

networks:
  traefik:
    name: traefik

And enabling the service with docker-compose:

# docker compose -p whoami -f whoami/docker-compose.yml up -d

WARN[0000] a network with name traefik exists but was not created for project "whoami".

Set `external: true` to use an existing network

[+] Running 1/1

✔ Container simple-service Started 0.3s

And a simple test of the service:

❯ curl -I http://whoami.tests.loureiro.eng.br

HTTP/1.1 308 Permanent Redirect

Location: https://whoami.tests.loureiro.eng.br/

Date: Thu, 24 Apr 2025 12:43:55 GMT

Content-Length: 18

❯ curl https://whoami.tests.loureiro.eng.br

Hostname: 0da540e1039c

IP: 127.0.0.1

IP: ::1

IP: 172.18.0.3

RemoteAddr: 172.18.0.2:52686

GET / HTTP/1.1

Host: whoami.tests.loureiro.eng.br

User-Agent: curl/8.5.0

Accept: */*

Accept-Encoding: gzip

X-Forwarded-For: 1.2.3.4

X-Forwarded-Host: whoami.tests.loureiro.eng.br

X-Forwarded-Port: 443

X-Forwarded-Proto: https

X-Forwarded-Server: 4cacedb0129a

X-Real-Ip: 1.2.3.4

The first curl, to the endpoint on port 80 (http), receives a redirect to https.

Perfect!

The second returns the container's data transparently.
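The redirect check is easy to script if you want to repeat it after every configuration change. A minimal sketch; `redirect_target` is a hypothetical helper that only parses the headers printed by `curl -sI` and knows nothing about traefik:

```shell
# redirect_target: read HTTP response headers on stdin and print
# "<status> <location>" when the response is a redirect (301, 302, 307, 308).
# Fails (non-zero exit) for any other response.
redirect_target() {
    tr -d '\r' | awk '
        NR == 1 { status = $2 }
        tolower($1) == "location:" { location = $2 }
        END {
            if (status ~ /^30[1278]$/ && location != "") {
                print status, location
            } else {
                exit 1
            }
        }
    '
}

# Usage:
#   curl -sI http://whoami.tests.loureiro.eng.br | redirect_target
```

The `tr -d '\r'` step matters because HTTP headers end in CRLF, which would otherwise leave a stray carriage return glued to the URL.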

The service works! But only for http and https. SSH on port 2222 is still missing; it sits on that port so as not to interfere with the system's regular ssh.

For that I configured a Gitea instance, as I described at the start of the article.

Its docker-compose.yml is the following:

services:
  server:
    image: gitea/gitea:1.23-rootless #latest-rootless
    container_name: gitea
    environment:
      - GITEA__database__DB_TYPE=postgres
      - GITEA__database__HOST=db:5432
      - GITEA__database__NAME=gitea
      - GITEA__database__USER=gitea
      - GITEA__database__PASSWD=A4COU5a6JF5ZvWoSufi0L1aomSkzqww7s1wv039qy6o=
      - LOCAL_ROOT_URL=https://gitea.tests.loureiro.eng.br
      - GITEA__openid__ENABLE_OPENID_SIGNIN=false
      - GITEA__openid__ENABLE_OPENID_SIGNUP=false
      - GITEA__service__DISABLE_REGISTRATION=true
      - GITEA__service__SHOW_REGISTRATION_BUTTON=false
      - GITEA__server__SSH_DOMAIN=gitea.tests.loureiro.eng.br
      - GITEA__server__START_SSH_SERVER=true
      - GITEA__server__DISABLE_SSH=false
    restart: always
    volumes:
      - gitea-data:/var/lib/gitea
      - gitea-config:/etc/gitea
      - /etc/timezone:/etc/timezone:ro
      - /etc/localtime:/etc/localtime:ro
    #ports:
    #  - "127.0.0.1:3000:3000"
    #  - "127.0.0.1:2222:2222"
    depends_on:
      - db
    networks:
      - traefik
    labels:
      - "traefik.enable=true"
      - "traefik.http.routers.giteaweb.rule=Host(`gitea.tests.loureiro.eng.br`)"
      - "traefik.http.routers.giteaweb.entrypoints=websecure"
      - "traefik.http.routers.giteaweb.tls=true"
      - "traefik.http.routers.giteaweb.tls.certresolver=letsencrypt"
      - "traefik.http.services.giteaweb.loadbalancer.server.port=3000"
      - "traefik.http.middlewares.giteaweb-ipwhitelist.ipallowlist.sourcerange=1.2.3.4, 4.5.6.7"
      - "traefik.http.routers.giteaweb.middlewares=giteaweb-ipwhitelist"
      - "traefik.tcp.routers.gitea-ssh.rule=HostSNI(`*`)"
      - "traefik.tcp.routers.gitea-ssh.entrypoints=ssh"
      - "traefik.tcp.routers.gitea-ssh.service=gitea-ssh-svc"
      - "traefik.tcp.services.gitea-ssh-svc.loadbalancer.server.port=2222"
      - "traefik.tcp.middlewares.giteassh-ipwhitelist.ipallowlist.sourcerange=1.2.3.4, 4.5.6.7"
      - "traefik.tcp.routers.gitea-ssh.middlewares=giteassh-ipwhitelist"

  db:
    image: postgres:17
    restart: always
    container_name: gitea_db
    environment:
      - POSTGRES_DB=gitea
      - POSTGRES_USER=gitea
      - POSTGRES_PASSWORD=A4COU5a6JF5ZvWoSufi0L1aomSkzqww7s1wv039qy6o=
    networks:
      - traefik
    volumes:
      - gitea-db:/var/lib/postgresql/data

networks:
  traefik:
    name: traefik

volumes:
  gitea-data:
  gitea-config:
  gitea-db:

You can see that there is one rule for the web part, which runs on port 3000 of the container, and one for the ssh part, which sits on port 2222. I left the section that publishes the ports commented out to show clearly that it isn't needed. In fact, it doesn't work if you specify the ports.
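The four TCP labels follow a fixed pattern, so they can be generated instead of typed by hand. A sketch only: `tcp_labels` is a hypothetical helper, and the `<name>-svc` service naming just mirrors the convention used in the compose file above. The rule is `HostSNI(`*`)` because plain SSH is not TLS, so there is no SNI hostname for traefik to match on:

```shell
# tcp_labels NAME ENTRYPOINT PORT
# Print the Docker labels for a plain-TCP traefik router named NAME,
# listening on ENTRYPOINT and forwarding to container port PORT.
tcp_labels() (
    name="$1"
    entrypoint="$2"
    port="$3"
    # Catch-all rule: non-TLS TCP traffic carries no SNI to route on.
    printf 'traefik.tcp.routers.%s.rule=HostSNI(`*`)\n' "$name"
    printf 'traefik.tcp.routers.%s.entrypoints=%s\n' "$name" "$entrypoint"
    printf 'traefik.tcp.routers.%s.service=%s-svc\n' "$name" "$name"
    printf 'traefik.tcp.services.%s-svc.loadbalancer.server.port=%s\n' "$name" "$port"
)

# Usage:
#   tcp_labels gitea-ssh ssh 2222
```

Because the rule is a catch-all, each non-TLS TCP service needs its own entrypoint (its own port); two such routers cannot share one.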

And finally running the service:

# docker compose -p gitea -f gitea/docker-compose.yml up -d

WARN[0000] a network with name traefik exists but was not created for project "gitea".

Set `external: true` to use an existing network

[+] Running 2/2

✔ Container gitea_db Started 0.3s

✔ Container gitea Started 1.0s

And on to the tests.

❯ curl -s https://gitea.tests.loureiro.eng.br | head -n 10

<!DOCTYPE html>

<html lang="en-US" data-theme="gitea-auto">

<head>

<meta name="viewport" content="width=device-width, initial-scale=1">

<title>Gitea: Git with a cup of tea</title>

<link rel="manifest" href="data:application/json;base64,eyJuYW1lIjoiR2l0ZWE6IEdpdCB3aXRoIGEgY3VwIG9mIHRlYSIsInNob3J0X25hbWUiOiJHaXRlYTogR2l0IHdpdGggYSBjdXAgb2YgdGVhIiwic3RhcnRfdXJsIjoiaHR0cDovL2xvY2FsaG9zdDozMDAwLyIsImljb25zIjpbeyJzcmMiOiJodHRwOi8vbG9jYWxob3N0OjMwMDAvYXNzZXRzL2ltZy9sb2dvLnBuZyIsInR5cGUiOiJpbWFnZS9wbmciLCJzaXplcyI6IjUxMng1MTIifSx7InNyYyI6Imh0dHA6Ly9sb2NhbGhvc3Q6MzAwMC9hc3NldHMvaW1nL2xvZ28uc3ZnIiwidHlwZSI6ImltYWdlL3N2Zyt4bWwiLCJzaXplcyI6IjUxMng1MTIifV19">

<meta name="author" content="Gitea - Git with a cup of tea">

<meta name="description" content="Gitea (Git with a cup of tea) is a painless self-hosted Git service written in Go">

<meta name="keywords" content="go,git,self-hosted,gitea">

<meta name="referrer" content="no-referrer">

❯ telnet gitea.tests.loureiro.eng.br 2222

Trying 1.2.3.4...

Connected to gitea.tests.loureiro.eng.br.

Escape character is '^]'.

SSH-2.0-Go

^]

telnet> q

Connection closed.

And that's it! We have the service working and routed through traefik. And we can apply IP ACLs to the service without needing the firewall for it.
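The allowlist `sourcerange` accepts CIDR ranges as well as single addresses. To make it concrete what "source address inside the range" means, here is a small illustration in plain shell arithmetic. It is not traefik's implementation, it handles IPv4 only, and `ip_to_int` and `ip_in_cidr` are hypothetical helper names:

```shell
# ip_to_int A.B.C.D -> print the address as a 32-bit integer.
ip_to_int() (
    IFS=.
    set -- $1                     # split the dotted quad into $1..$4
    echo $(( ($1 << 24) + ($2 << 16) + ($3 << 8) + $4 ))
)

# ip_in_cidr IP RANGE
# Succeed if IP falls inside RANGE, where RANGE is either a CIDR block
# (172.16.0.0/24) or a single address (treated as /32).
ip_in_cidr() (
    ip="$1"
    case "$2" in
        */*) net="${2%/*}"; bits="${2#*/}" ;;
        *)   net="$2"; bits=32 ;;
    esac
    # Build the netmask: the top $bits bits set, the rest cleared.
    mask=$(( (0xFFFFFFFF << (32 - bits)) & 0xFFFFFFFF ))
    [ $(( $(ip_to_int "$ip") & mask )) -eq $(( $(ip_to_int "$net") & mask )) ]
)

# Usage:
#   ip_in_cidr 1.2.3.4 1.2.3.0/24 && echo allowed || echo denied
```

A quick check like this is handy for predicting whether a client address will pass the allowlist before locking yourself out.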

Have fun!

Note: I included only a basic Gitea configuration as an example. It won't work if you just copy and paste it. To have a running service, you'll need to add a few more settings under environment.