I took too long a vacation. It was actually only 3 weeks, but I more or less stopped posting here during that time, and the procrastination came back strong. So here we go with an attempt to get back to writing weekly.

Today, while troubleshooting a service that wasn't working as it should (an application scan with OWASP ZAP), I found out that my Docker containers had no network access. What changed? My work machine runs Ubuntu 18.04. The bionic-updates repository brought in a new Docker version, which restarted the daemon, but... networking stopped working. And I only noticed it today.

root@dell-latitude-7480 /u/local# apt show docker.io

Package: docker.io

Version: 20.10.7-0ubuntu1~18.04.1

Built-Using: glibc (= 2.27-3ubuntu1.2), golang-1.13 (= 1.13.8-1ubuntu1~18.04.3)

Priority: optional

Section: universe/admin

Origin: Ubuntu

Maintainer: Ubuntu Developers <[...]>

Original-Maintainer: Paul Tagliamonte <[...]>

Bugs: https://bugs.launchpad.net/ubuntu/+filebug

Installed-Size: 193 MB

Depends: adduser, containerd (>= 1.2.6-0ubuntu1~), iptables, debconf (>= 0.5) | debconf-2.0, libc6 (>= 2.8), libdevmapper1.02.1 (>= 2:1.02.97), libsystemd0 (>= 209~)

Recommends: ca-certificates, git, pigz, ubuntu-fan, xz-utils, apparmor

Suggests: aufs-tools, btrfs-progs, cgroupfs-mount | cgroup-lite, debootstrap, docker-doc, rinse, zfs-fuse | zfsutils

Breaks: docker (<< 1.5~)

Replaces: docker (<< 1.5~)

Homepage: https://www.docker.com/community-edition

Download-Size: 36.9 MB

APT-Manual-Installed: yes

APT-Sources: mirror://mirrors.ubuntu.com/mirrors.txt bionic-updates/universe amd64 Packages

Description: Linux container runtime

 Docker complements kernel namespacing with a high-level API which operates at the process level. It runs unix processes with strong guarantees of isolation and repeatability across servers.

 .

 Docker is a great building block for automating distributed systems: large-scale web deployments, database clusters, continuous deployment systems, private PaaS, service-oriented architectures, etc.

 .

 This package contains the daemon and client. Using docker.io on non-amd64 hosts is not supported at this time. Please be careful when using it on anything besides amd64.

 .

 Also, note that kernel version 3.8 or above is required for proper operation of the daemon process, and that any lower versions may have subtle and/or glaring issues.

N: There is 1 additional record. Please use the '-a' switch to see it

The first thing I tried was simply restarting Docker.

root@dell-latitude-7480 /u/local# systemctl restart --no-block docker; journalctl -u docker -f

[...]

Aug 12 10:29:25 dell-latitude-7480 dockerd[446605]: time="2021-08-12T10:29:25.203367946+02:00" level=info msg="Firewalld: docker zone already exists, returning"

Aug 12 10:29:25 dell-latitude-7480 dockerd[446605]: time="2021-08-12T10:29:25.549158535+02:00" level=warning msg="could not create bridge network for id 88bd200

b5bb27d3fd10d9e8bf86b1947b2190cf7be36cd7243eec55ac8089dc6 bridge name docker0 while booting up from persistent state: Failed to program NAT chain:

ZONE_CONFLICT: 'docker0' already bound to a zone"

Aug 12 10:29:25 dell-latitude-7480 dockerd[446605]: time="2021-08-12T10:29:25.596805407+02:00" level=info msg="stopping event stream following graceful shutdown"

error="" module=libcontainerd namespace=moby

Aug 12 10:29:25 dell-latitude-7480 dockerd[446605]: time="2021-08-12T10:29:25.596994440+02:00" level=info msg="stopping event stream following graceful shutdown"

error="context canceled" module=libcontainerd namespace=plugins.moby

Aug 12 10:29:25 dell-latitude-7480 dockerd[446605]: failed to start daemon: Error initializing network controller: Error creating default "bridge" network:

Failed to program NAT chain: ZONE_CONFLICT: 'docker0' already bound to a zone

Aug 12 10:29:25 dell-latitude-7480 systemd[1]: docker.service: Main process exited, code=exited, status=1/FAILURE

Aug 12 10:29:25 dell-latitude-7480 systemd[1]: docker.service: Failed with result 'exit-code'.

Aug 12 10:29:25 dell-latitude-7480 systemd[1]: Failed to start Docker Application Container Engine.

Aug 12 10:29:27 dell-latitude-7480 systemd[1]: docker.service: Service hold-off time over, scheduling restart.

Aug 12 10:29:27 dell-latitude-7480 systemd[1]: docker.service: Scheduled restart job, restart counter is at 3.

Aug 12 10:29:27 dell-latitude-7480 systemd[1]: Stopped Docker Application Container Engine.

Aug 12 10:29:27 dell-latitude-7480 systemd[1]: docker.service: Start request repeated too quickly.

Aug 12 10:29:27 dell-latitude-7480 systemd[1]: docker.service: Failed with result 'exit-code'.

Aug 12 10:29:27 dell-latitude-7480 systemd[1]: Failed to start Docker Application Container Engine.

The lines are wrapped by hand to make them easier to read, since systemd produces lines much longer than 120 columns. But the result was... failure.
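The telling part of that log is the `ZONE_CONFLICT` message. Here is a minimal sketch for pulling the affected interface out of a journal dump. The name `zone_conflict_ifaces` is hypothetical, and the pattern assumes the message format seen in the log above and that each message sits on a single line, as the journal prints it.

```shell
# Hypothetical helper: print each interface named in a firewalld
# ZONE_CONFLICT message, one per line, without duplicates.
# Reads log text on stdin, for example:
#   journalctl -u docker -b | zone_conflict_ifaces
zone_conflict_ifaces() {
    sed -n "s/.*ZONE_CONFLICT: '\([^']*\)' already bound to a zone.*/\1/p" | sort -u
}
```

If it prints `docker0`, the bridge is bound to a zone that Docker is not allowed to touch, which is the situation described in the rest of this post.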

Stopping firewalld and restarting only Docker led to a state where the daemon started, but the container, once running, again had no network access.

root@dell-latitude-7480 /u/local# docker run -it --rm --init ubuntu:20.04 bash

root@f45dcbb1ecaa:/# ping 1.1.1.1

PING 1.1.1.1 (1.1.1.1) 56(84) bytes of data.

^C

--- 1.1.1.1 ping statistics ---

6 packets transmitted, 0 received, 100% packet loss, time 5153ms

root@f45dcbb1ecaa:/# exit

Looking only at the firewall rules, I could see that Docker really did load the correct rule when firewalld was not running:

root@dell-latitude-7480 /u/local# systemctl stop firewalld.service

root@dell-latitude-7480 /u/local# iptables -L -n -t nat

Chain PREROUTING (policy ACCEPT)

target prot opt source destination

Chain INPUT (policy ACCEPT)

target prot opt source destination

Chain OUTPUT (policy ACCEPT)

target prot opt source destination

Chain POSTROUTING (policy ACCEPT)

target prot opt source destination

root@dell-latitude-7480 /u/local# systemctl restart docker

root@dell-latitude-7480 /u/local# systemctl status docker

● docker.service - Docker Application Container Engine

Loaded: loaded (/lib/systemd/system/docker.service; enabled; vendor preset: enabled)

Active: active (running) since Thu 2021-08-12 12:01:12 CEST; 4s ago

Docs: https://docs.docker.com

Main PID: 484649 (dockerd)

Tasks: 27

CGroup: /system.slice/docker.service

└─484649 /usr/bin/dockerd -H fd:// --containerd=/run/containerd/containerd.sock

Aug 12 12:01:12 dell-latitude-7480 dockerd[484649]: time="2021-08-12T12:01:12.061383466+02:00" level=warning msg="Your kernel does not support swap

memory limit"

Aug 12 12:01:12 dell-latitude-7480 dockerd[484649]: time="2021-08-12T12:01:12.061414030+02:00" level=warning msg="Your kernel does not support CPU

realtime scheduler"

Aug 12 12:01:12 dell-latitude-7480 dockerd[484649]: time="2021-08-12T12:01:12.061421558+02:00" level=warning msg="Your kernel does not support cgroup

blkio weight"

Aug 12 12:01:12 dell-latitude-7480 dockerd[484649]: time="2021-08-12T12:01:12.061427194+02:00" level=warning msg="Your kernel does not support cgroup

blkio weight_device"

Aug 12 12:01:12 dell-latitude-7480 dockerd[484649]: time="2021-08-12T12:01:12.061796106+02:00" level=info msg="Loading containers: start."

Aug 12 12:01:12 dell-latitude-7480 dockerd[484649]: time="2021-08-12T12:01:12.531851162+02:00" level=info msg="Loading containers: done."

Aug 12 12:01:12 dell-latitude-7480 dockerd[484649]: time="2021-08-12T12:01:12.549979768+02:00" level=info msg="Docker daemon"

commit="20.10.7-0ubuntu1~18.04.1" graphdriver(s)=overlay2 version=20.10.7

Aug 12 12:01:12 dell-latitude-7480 dockerd[484649]: time="2021-08-12T12:01:12.550057275+02:00" level=info msg="Daemon has completed initialization"

Aug 12 12:01:12 dell-latitude-7480 dockerd[484649]: time="2021-08-12T12:01:12.558188106+02:00" level=info msg="API listen on /var/run/docker.sock"

Aug 12 12:01:12 dell-latitude-7480 systemd[1]: Started Docker Application Container Engine.

root@dell-latitude-7480 /u/local# iptables -L -n -t nat

Chain PREROUTING (policy ACCEPT)

target prot opt source destination

DOCKER all -- 0.0.0.0/0 0.0.0.0/0 ADDRTYPE match dst-type LOCAL

Chain INPUT (policy ACCEPT)

target prot opt source destination

Chain OUTPUT (policy ACCEPT)

target prot opt source destination

DOCKER all -- 0.0.0.0/0 !127.0.0.0/8 ADDRTYPE match dst-type LOCAL

Chain POSTROUTING (policy ACCEPT)

target prot opt source destination

MASQUERADE all -- 172.16.0.0/24 0.0.0.0/0

Chain DOCKER (2 references)

target prot opt source destination

RETURN all -- 0.0.0.0/0 0.0.0.0/0

Clearly there was a MASQUERADE rule for the Docker network (172.16.0.0/24), and firewalld was wiping that rule out when it started. To keep the output less cluttered, I grabbed only the POSTROUTING chain:
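The same check can be scripted instead of eyeballed. This is a minimal sketch, not something from the original troubleshooting session: `has_masquerade` is a hypothetical helper, and it assumes the listing comes from `iptables -t nat -L POSTROUTING -n` without `--line-numbers`, so the source address is the fourth column.

```shell
# Hypothetical helper: succeed if the nat POSTROUTING listing on stdin
# has a MASQUERADE rule whose source is the given subnet.
# Usage: iptables -t nat -L POSTROUTING -n | has_masquerade 172.16.0.0/24
has_masquerade() {
    awk -v subnet="$1" '
        $1 == "MASQUERADE" && $4 == subnet { found = 1 }
        END { exit found ? 0 : 1 }
    '
}
```

Run once with firewalld stopped and once with it started, it would return success in the first case and failure in the second, matching the two listings in this post.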

root@dell-latitude-7480 /u/local# systemctl start firewalld.service

root@dell-latitude-7480 /u/local# iptables -L POSTROUTING -n -t nat

Chain POSTROUTING (policy ACCEPT)

target prot opt source destination

POSTROUTING_direct all -- 0.0.0.0/0 0.0.0.0/0

POSTROUTING_ZONES_SOURCE all -- 0.0.0.0/0 0.0.0.0/0

POSTROUTING_ZONES all -- 0.0.0.0/0 0.0.0.0/0

My first idea: force a MASQUERADE rule straight into the POSTROUTING chain.

root@dell-latitude-7480 /u/local# iptables -I POSTROUTING 1 -s 172.16.0.0/24 -j MASQUERADE -t nat

root@dell-latitude-7480 /u/local# iptables -L POSTROUTING --line-numbers -t nat

Chain POSTROUTING (policy ACCEPT)

num target prot opt source destination

1 MASQUERADE all -- 172.16.0.0/24 anywhere

2 POSTROUTING_direct all -- anywhere anywhere

3 POSTROUTING_ZONES_SOURCE all -- anywhere anywhere

4 POSTROUTING_ZONES all -- anywhere anywhere

And, of course, it didn't work.

After searching the Internet for Docker and firewalld, I found Docker's own site explaining how to handle this at https://docs.docker.com/network/iptables/ with the following commands:

# Please substitute the appropriate zone and docker interface

$ firewall-cmd --zone=trusted --remove-interface=docker0 --permanent

$ firewall-cmd --reload

Great. Now there was no way it could go wrong. And...

root@dell-latitude-7480 /u/local# firewall-cmd --get-zone-of-interface=docker0

public

root@dell-latitude-7480 /u/local# firewall-cmd --zone=public --remove-interface=docker0 --permanent

The interface is under control of NetworkManager and already bound to the default zone

The interface is under control of NetworkManager, setting zone to default.

success

root@dell-latitude-7480 /u/local# systemctl start docker

Job for docker.service failed because the control process exited with error code.

See "systemctl status docker.service" and "journalctl -xe" for details.

Damn... something wrong was not right. Well... had it worked the first time, I probably wouldn't have written this article.

So let's review which zone the docker0 interface is in, remove the interface from that zone, and add it to the docker zone.

root@dell-latitude-7480 /u/local# firewall-cmd --get-zone-of-interface=docker0

public

root@dell-latitude-7480 /u/local# firewall-cmd --zone=public --remove-interface=docker0 --permanent

The interface is under control of NetworkManager and already bound to the default zone

The interface is under control of NetworkManager, setting zone to default.

success

root@dell-latitude-7480 /u/local# firewall-cmd --get-zone-of-interface=docker0

public

root@dell-latitude-7480 /u/local# firewall-cmd --reload

success

root@dell-latitude-7480 /u/local# firewall-cmd --get-zone-of-interface=docker0

public

What the heck... that was the problem I ran into. No matter how many times I removed, or tried to remove, the docker0 interface from the public zone, it always came back.

It took a few hours of this back and forth, searching the Internet for what to do and reading the firewalld documentation, until I finally got it right.

root@dell-latitude-7480 /u/local# firewall-cmd --zone=docker --add-interface=docker0 --permanent

The interface is under control of NetworkManager, setting zone to 'docker'.

success

root@dell-latitude-7480 /u/local# firewall-cmd --get-zone-of-interface=docker0

docker

root@dell-latitude-7480 /u/local# firewall-cmd --reload

success

root@dell-latitude-7480 /u/local# systemctl start docker

So the remove command wasn't needed at all. Just add the interface directly to the desired zone.
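For the next time a package update undoes this, the fix can be wrapped in a small function. This is only a sketch of the steps that worked here: `ensure_zone` is a hypothetical name, and the `FIREWALL_CMD` variable is an assumption added so the command can be swapped for a stub when testing. It uses only the `firewall-cmd` options already shown in this post.

```shell
# Sketch: bind an interface to a firewalld zone only if it is not there yet.
# FIREWALL_CMD is an assumption of this sketch; it defaults to firewall-cmd.
FIREWALL_CMD="${FIREWALL_CMD:-firewall-cmd}"

ensure_zone() {
    iface="$1"
    zone="$2"
    current="$("$FIREWALL_CMD" --get-zone-of-interface="$iface" 2>/dev/null)"
    if [ "$current" = "$zone" ]; then
        echo "$iface already in zone $zone"
        return 0
    fi
    # Adding directly moves the interface; no --remove-interface first.
    "$FIREWALL_CMD" --zone="$zone" --add-interface="$iface" --permanent &&
        "$FIREWALL_CMD" --reload
}
```

As root, `ensure_zone docker0 docker && systemctl start docker` would reproduce the sequence above.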

root@dell-latitude-7480 /u/local# docker run -it --rm --init ubuntu:20.04 bash

root@e5d78d7f081b:/# ping -c 5 1.1.1.1

PING 1.1.1.1 (1.1.1.1) 56(84) bytes of data.

64 bytes from 1.1.1.1: icmp_seq=1 ttl=58 time=1.95 ms

64 bytes from 1.1.1.1: icmp_seq=2 ttl=58 time=2.02 ms

64 bytes from 1.1.1.1: icmp_seq=3 ttl=58 time=1.68 ms

64 bytes from 1.1.1.1: icmp_seq=4 ttl=58 time=1.62 ms

64 bytes from 1.1.1.1: icmp_seq=5 ttl=58 time=1.76 ms

--- 1.1.1.1 ping statistics ---

5 packets transmitted, 5 received, 0% packet loss, time 4003ms

rtt min/avg/max/mdev = 1.621/1.808/2.026/0.162 ms

root@e5d78d7f081b:/# exit

And the bolha (the "bubble", my GoToSocial instance) is up. Or almost.

The first week in operation was error 502 all the time. I thought the problem was the way it was running under systemd, so I created a new service just for it.

# /etc/systemd/user/podman-compose@.service

[Unit]

Description=GoToSocial as container service

StartLimitIntervalSec=0

[Service]

Type=simple

User=helio

Group=helio

#WorkingDirectory=/home/helio/gotosocial

ExecStart=/home/helio/gotosocial/entrypoint.sh start

ExecStop=/home/helio/gotosocial/entrypoint.sh stop

Restart=always

RestartSec=30

[Install]

WantedBy=default.target

Then I thought it was the environment.

I commented out the WorkingDirectory line, as can be seen above.

I also replaced podman-compose up with this entrypoint.sh script.

#! /usr/bin/env bash

GOTOSOCIAL_DIR="/home/helio/gotosocial"

start_gotosocial() {

echo "Starting gotosocial"

cd $GOTOSOCIAL_DIR

/usr/bin/podman pull docker.io/superseriousbusiness/gotosocial:latest

/usr/bin/podman pull docker.io/library/postgres:latest

/usr/bin/podman-compose down

sleep 5

/usr/bin/podman-compose up

}

stop_gotosocial() {

echo "Stopping GoToSocial"

cd $GOTOSOCIAL_DIR

/usr/bin/podman-compose down

}

case $1 in

start) start_gotosocial ;;

stop) stop_gotosocial ;;

restart) $0 stop

sleep 30

$0 start

;;

*) echo "Unknown option: $1"

exit 1

esac

The podman pull commands used to be in the systemd service.

I moved everything into the script.

And the result was: 502.

So I started to suspect I had done something wrong in compose.yml.

services:

  gotosocial:

    image: docker.io/superseriousbusiness/gotosocial:latest

    container_name: gotosocial

    user: 1000:1000

    networks:

      - gotosocial

    environment:

      # Change this to your actual host value.

      GTS_HOST: bolha.linux-br.org

      GTS_DB_TYPE: postgres

      GTS_CONFIG_PATH: /gotosocial/config.yaml

      # Path in the GtS Docker container where the

      # Wazero compilation cache will be stored.

      GTS_WAZERO_COMPILATION_CACHE: /gotosocial/.cache

      ## For reverse proxy setups:

      GTS_TRUSTED_PROXIES: "127.0.0.1,::1,172.18.0.0/16"

      ## Set the timezone of your server:

      TZ: Europe/Stockholm

    ports:

      - "127.0.0.1:8080:8080"

    volumes:

      - data:/gotosocial/storage

      - cache:/gotosocial/.cache

      - ~/gotosocial/config.yaml:/gotosocial/config.yaml

    restart: unless-stopped

    healthcheck:

      test: wget --no-vebose --tries=1 --spider http://localhost:8080/readyz

      interval: 10s

      retries: 5

      start_period: 30s

    depends:

      - postgres

  postgres:

    image: docker.io/library/postgres:latest

    container_name: postgres

    networks:

      - gotosocial

    environment:

      POSTGRES_PASSWORD: *****

      POSTGRES_USER: gotosocial

      POSTGRES_DB: gotosocial

    restart: unless-stopped

    volumes:

      - ~/gotosocial/postgresql:/var/lib/postgresql

    ports:

      - "5432:5432"

    healthcheck:

      test: pg_isready

      interval: 10s

      timeout: 5s

      retries: 5

      start_period: 120s

networks:

  gotosocial:

    ipam:

      driver: default

      config:

        - subnet: "172.18.0.0/16"

          gateway: "172.18.0.1"

volumes:

  data:

  cache:

Nothing too fancy. A postgres running alongside a gotosocial. Some proxy settings, the proxy being the machine's nginx, and that's it. And the 502 continued.

But if I logged into the machine, started a tmux session, and ran podman-compose up inside it, then everything worked.

So I took a look at the error.

Oct 15 10:16:56 mimir entrypoint.sh[1895291]: podman-compose version: 1.0.6

Oct 15 10:16:56 mimir entrypoint.sh[1895291]: ['podman', '--version', '']

Oct 15 10:16:56 mimir entrypoint.sh[1895291]: using podman version: 4.9.3

Oct 15 10:16:56 mimir entrypoint.sh[1895291]: ** excluding: set()

Oct 15 10:16:56 mimir entrypoint.sh[1895291]: ['podman', 'ps', '--filter', 'label=io.podman.compose.project=gotosocial', '-a', '--format', '{{ index .Labels "io.podman.compose.config-hash"}}']

Oct 15 10:16:56 mimir entrypoint.sh[1895303]: time="2025-10-15T10:16:56+02:00" level=warning msg="RunRoot is pointing to a path (/run/user/1000/containers) which is not writable. Most likely podman will fail."

Oct 15 10:16:56 mimir entrypoint.sh[1895303]: Error: default OCI runtime "crun" not found: invalid argument

Oct 15 10:16:57 mimir entrypoint.sh[1895291]: Traceback (most recent call last):

Oct 15 10:16:57 mimir entrypoint.sh[1895291]: File "/usr/bin/podman-compose", line 33, in <module>

Oct 15 10:16:57 mimir entrypoint.sh[1895291]: sys.exit(load_entry_point('podman-compose==1.0.6', 'console_scripts', 'podman-compose')())

Oct 15 10:16:57 mimir entrypoint.sh[1895291]: ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

Oct 15 10:16:57 mimir entrypoint.sh[1895291]: File "/usr/lib/python3/dist-packages/podman_compose.py", line 2941, in main

Oct 15 10:16:57 mimir entrypoint.sh[1895291]: podman_compose.run()

Oct 15 10:16:57 mimir entrypoint.sh[1895291]: File "/usr/lib/python3/dist-packages/podman_compose.py", line 1423, in run

Oct 15 10:16:57 mimir entrypoint.sh[1895291]: cmd(self, args)

Oct 15 10:16:57 mimir entrypoint.sh[1895291]: File "/usr/lib/python3/dist-packages/podman_compose.py", line 1754, in wrapped

Oct 15 10:16:57 mimir entrypoint.sh[1895291]: return func(*args, **kw)

Oct 15 10:16:57 mimir entrypoint.sh[1895291]: ^^^^^^^^^^^^^^^^^

Oct 15 10:16:57 mimir entrypoint.sh[1895291]: File "/usr/lib/python3/dist-packages/podman_compose.py", line 2038, in compose_up

Oct 15 10:16:57 mimir entrypoint.sh[1895291]: compose.podman.output(

Oct 15 10:16:57 mimir entrypoint.sh[1895291]: File "/usr/lib/python3/dist-packages/podman_compose.py", line 1098, in output

Oct 15 10:16:57 mimir entrypoint.sh[1895291]: return subprocess.check_output(cmd_ls)

Oct 15 10:16:57 mimir entrypoint.sh[1895291]: ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

Oct 15 10:16:57 mimir entrypoint.sh[1895291]: File "/usr/lib/python3.12/subprocess.py", line 466, in check_output

Oct 15 10:16:57 mimir entrypoint.sh[1895291]: return run(*popenargs, stdout=PIPE, timeout=timeout, check=True,

Oct 15 10:16:57 mimir entrypoint.sh[1895291]: ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

Oct 15 10:16:57 mimir entrypoint.sh[1895291]: File "/usr/lib/python3.12/subprocess.py", line 571, in run

Oct 15 10:16:57 mimir entrypoint.sh[1895291]: raise CalledProcessError(retcode, process.args,

Oct 15 10:16:57 mimir entrypoint.sh[1895291]: subprocess.CalledProcessError: Command '['podman', 'ps', '--filter', 'label=io.podman.compose.project=gotosocial', '-a', '--format', '{{ index .Labels "io.podman.compose.config-hash"}}']' returned non-zero exit status 125.

Oct 15 10:16:57 mimir systemd[1]: gotosocial.service: Main process exited, code=exited, status=1/FAILURE

Oct 15 10:16:57 mimir systemd[1]: gotosocial.service: Failed with result 'exit-code'.

Oct 15 10:16:57 mimir systemd[1]: gotosocial.service: Consumed 1.481s CPU time.

Oct 15 10:17:27 mimir systemd[1]: gotosocial.service: Scheduled restart job, restart counter is at 1280.

Oct 15 10:17:27 mimir systemd[1]: Started gotosocial.service - GoToSocial as container service.

Oct 15 10:17:27 mimir entrypoint.sh[1895707]: Starting gotosocial

Oct 15 10:17:30 mimir entrypoint.sh[1895781]: podman-compose version: 1.0.6

Oct 15 10:17:30 mimir entrypoint.sh[1895781]: ['podman', '--version', '']

Oct 15 10:17:30 mimir entrypoint.sh[1895781]: using podman version: 4.9.3

Oct 15 10:17:30 mimir entrypoint.sh[1895781]: ** excluding: set()

Oct 15 10:17:30 mimir entrypoint.sh[1895781]: podman stop -t 10 postgres

Oct 15 10:17:31 mimir entrypoint.sh[1895781]: exit code: 0

Oct 15 10:17:31 mimir entrypoint.sh[1895781]: podman stop -t 10 gotosocial

Oct 15 10:17:31 mimir entrypoint.sh[1895781]: exit code: 0

Oct 15 10:17:31 mimir entrypoint.sh[1895781]: podman rm postgres

Oct 15 10:17:31 mimir entrypoint.sh[1895781]: exit code: 0

Oct 15 10:17:31 mimir entrypoint.sh[1895781]: podman rm gotosocial

Oct 15 10:17:31 mimir entrypoint.sh[1895781]: exit code: 0

Oct 15 10:17:36 mimir entrypoint.sh[1895920]: podman-compose version: 1.0.6

Oct 15 10:17:36 mimir entrypoint.sh[1895920]: ['podman', '--version', '']

Oct 15 10:17:36 mimir entrypoint.sh[1895920]: using podman version: 4.9.3

Oct 15 10:17:36 mimir entrypoint.sh[1895920]: ** excluding: set()

Oct 15 10:17:36 mimir entrypoint.sh[1895920]: ['podman', 'ps', '--filter', 'label=io.podman.compose.project=gotosocial', '-a', '--format', '{{ index .Labels "io.podman.compose.config-hash"}}']

Oct 15 10:17:36 mimir entrypoint.sh[1895920]: podman volume inspect gotosocial_data || podman volume create gotosocial_data

Oct 15 10:17:36 mimir entrypoint.sh[1895920]: ['podman', 'volume', 'inspect', 'gotosocial_data']

Oct 15 10:17:36 mimir entrypoint.sh[1895920]: podman volume inspect gotosocial_cache || podman volume create gotosocial_cache

Oct 15 10:17:36 mimir entrypoint.sh[1895920]: ['podman', 'volume', 'inspect', 'gotosocial_cache']

Oct 15 10:17:36 mimir entrypoint.sh[1895920]: ['podman', 'network', 'exists', 'gotosocial_gotosocial']

Oct 15 10:17:36 mimir entrypoint.sh[1895920]: podman create --name=gotosocial --label io.podman.compose.config-hash=4f4b10e0c67c04b7b4f2392784b378735d4378d9d411f1405cf3819c6207bd1a --label io.podman.compose.project=gotosocial --label io.podman.compose.version=1.0.6 --label PODMAN_SYSTEMD_UNIT=[...] --label com.docker.compose.project=gotosocial --label com.docker.compose.project.working_dir=/home/helio/gotosocial --label com.docker.compose.project.config_files=compose.yaml --label com.docker.compose.container-number=1 --label com.docker.compose.service=gotosocial -e GTS_HOST=bolha.linux-br.org -e GTS_DB_TYPE=postgres -e GTS_CONFIG_PATH=/gotosocial/config.yaml -e GTS_WAZERO_COMPILATION_CACHE=/gotosocial/.cache -e GTS_TRUSTED_PROXIES=127.0.0.1,::1,172.18.0.0/16 -e TZ=Europe/Stockholm -v gotosocial_data:/gotosocial/storage -v gotosocial_cache:/gotosocial/.cache -v /home/helio/gotosocial/config.yaml:/gotosocial/config.yaml --net gotosocial_gotosocial --network-alias gotosocial -p 127.0.0.1:8080:8080 -u 1000:1000 --restart unless-stopped --healthcheck-command /bin/sh -c 'wget --no-vebose --tries=1 --spider http://localhost:8080/readyz' --healthcheck-interval 10s --healthcheck-start-period 30s --healthcheck-retries 5 docker.io/superseriousbusiness/gotosocial:latest

Oct 15 10:17:36 mimir entrypoint.sh[1895920]: exit code: 0

The final part, with podman create, is systemd restarting the service.

The problem is at the beginning, where there is a Python crash:

subprocess.CalledProcessError: Command '['podman', 'ps', '--filter', 'label=io.podman.compose.project=gotosocial', '-a', '--format', '{{ index .Labels "io.podman.compose.config-hash"}}']' returned non-zero exit status 125.

I would log into the machine and run the command to see the result:

❯ podman ps --filter 'label=io.podman.compose.project=gotosocial' -a --format '{{ index .Labels "io.podman.compose.config-hash"}}'

4f4b10e0c67c04b7b4f2392784b378735d4378d9d411f1405cf3819c6207bd1a

4f4b10e0c67c04b7b4f2392784b378735d4378d9d411f1405cf3819c6207bd1a

And it showed the containers running (because they had been restarted by systemd). I was left with that "huh!?" look on my face.

At the start of the error there is this other message:

Error: default OCI runtime "crun" not found: invalid argument

So I went to check whether there was some problem with crun.

And it is installed (I think it came in as a podman dependency):

❯ which crun

/usr/bin/crun

❯ dpkg -S /usr/bin/crun

crun: /usr/bin/crun

I searched for errors in GoToSocial itself. Nothing.

Looking everywhere to figure out what it could be, I noticed another error:

msg="RunRoot is pointing to a path (/run/user/1000/containers) which is not writable. Most likely podman will fail."

That sounded promising.

So perhaps the path RunRoot points to was, at some moment, not available for writing.

Could it be... systemd?

With that in mind, I started searching for anything related to timeouts or user logout.
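One way to test that suspicion from inside the service itself is to check the runtime directory before calling podman. This is a minimal sketch, not part of the original setup: `check_runtime_dir` is a hypothetical helper that could, for instance, be called at the top of entrypoint.sh so the journal shows whether the directory was there.

```shell
# Hypothetical helper: report whether a runtime directory is usable.
# Usage: check_runtime_dir "/run/user/$(id -u)"
check_runtime_dir() {
    dir="$1"
    if [ ! -d "$dir" ]; then
        echo "missing: $dir"
        return 1
    fi
    if [ ! -w "$dir" ]; then
        echo "not writable: $dir"
        return 2
    fi
    echo "ok: $dir"
}
```

A "missing" result while no login session is open would point at the user's runtime directory being removed on logout rather than at podman or crun.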

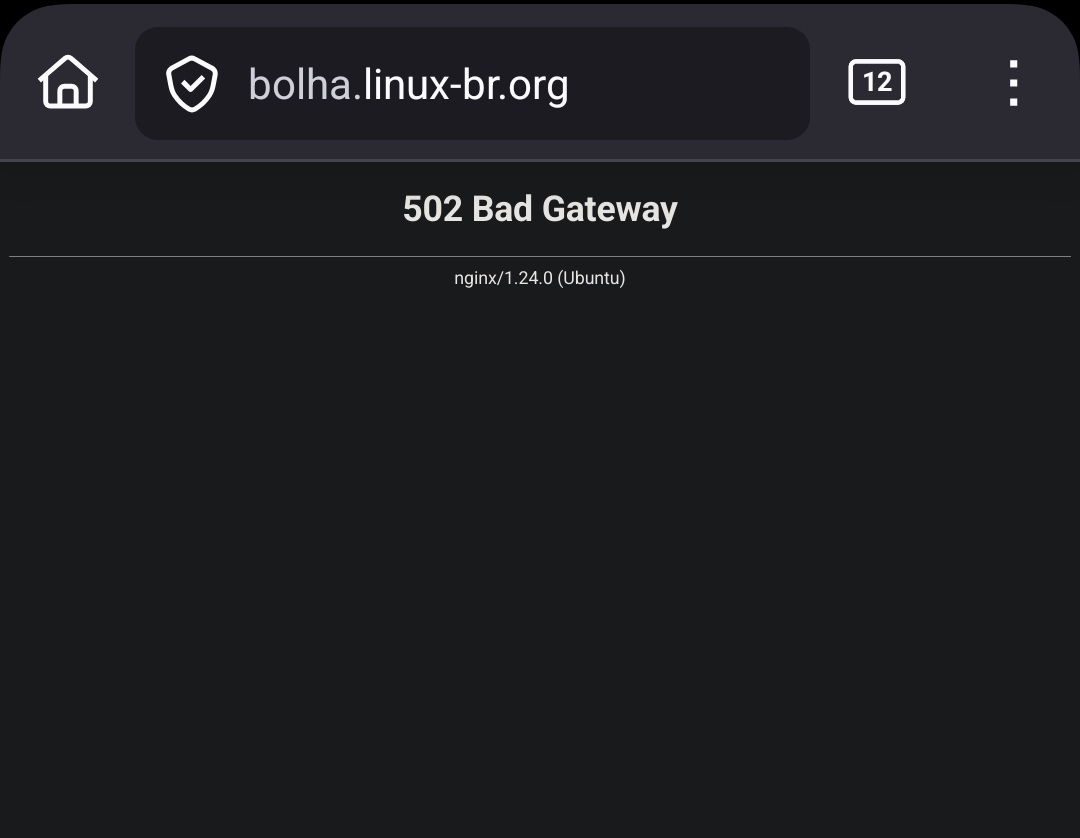

I ended up finding the article below:

In that article, someone comments that it could be a matter of the linger option.

I followed the reference it gave on the subject.

loginctl?

It even makes sense.

But shouldn't podman describe this in its documentation?

So I went looking and found this:

To make it clear where the reference to linger appears in the documentation:

Something that is vital for running as a service shows up as... an example??? These guys have got to be kidding me.

But in the end that was exactly it.

All it took was a sudo loginctl enable-linger helio to keep the container running after I leave the session.
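To confirm the setting stuck, the `Linger` property can be read back with `loginctl show-user`. This is a small sketch: `linger_enabled` is a hypothetical helper name, and the `LOGINCTL` variable is an assumption added so the command can be replaced by a stub when testing.

```shell
# Sketch: succeed if lingering is enabled for the given user.
# LOGINCTL is an assumption of this sketch; it defaults to loginctl.
LOGINCTL="${LOGINCTL:-loginctl}"

linger_enabled() {
    [ "$("$LOGINCTL" show-user "$1" --property=Linger 2>/dev/null)" = "Linger=yes" ]
}
```

Something like `linger_enabled helio || sudo loginctl enable-linger helio` would then enable lingering only when it is not already on.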

Had I decided to run it with docker compose, I probably wouldn't have had the same problem, since it runs with root privileges.

So that's one more lesson learned.

And even after reading the documentation, there are always a few points that the damn documentation only flicks at, and inside the examples on top of that.

But it's working. My bolha, bolha of mine.